Section 230: A Primer

Posted 12/13/2025

Senator Whitehouse (D, RI) announced that he’s moving to file a bill to repeal Section 230, the singly most important piece of Internet legislation, which goes on the chopping block a few times a year. While Section 230 is flawed, repealing it without any transition plan in place would be a civil rights disaster. Many have written articles about it. This one is mine.

Who is responsible for the Internet?

The core question is who is legally liable for content posted online, and what responsibility do social media platforms carry for the content they distribute? We have two analogies to draw from:

-

A newspaper. When a newspaper publishes an article, they bear legal responsibility for distributing its contents, and can be sued for libel. Even if the article is written by someone outside the paper, the newspaper editor chose to print the article and so accepts some responsibility for its dissemination.

-

A community tack board at your local coffee shop. Anyone can walk in and tack up whatever they want on the board. The coffee shop owner does not immediately bear liability for what members of the public have put up on the wall, and shouldn’t be sued because a patron tacked up a hateful or libelous message.

So which category does the Internet fall into? When someone posts a video or comment on YouTube, Instagram, Facebook, and so on, does the platform bear responsibility for distributing that content to others?

Before 1996 the legal standard used to rest on whether the platform exerted editorial control. If the platform had content moderation, then they chose what to remove from their platform, and implicitly what to leave up. If the platform had no moderation whatsoever then they could claim similar immunity to a coffee shop as passive facilitators. This meant that Internet service providers were legally in the clear - they fall in the same category as phone companies or the postal service in that they facilitate your communication without being a publisher - while forums and chat rooms were treated more like newspapers. As the Internet grew, this position became untenable: a human moderator can’t approve every Tweet and YouTube video before they go live, and these services can’t exist if social media companies accept legal liability for what every user posts online.

Enter Section 230 of the Communications Decency Act of 1996, which clarifies:

No provider or user of an interactive computer service shall be treated as the publisher or speaker of any information provided by another information content provider.

In short, platforms aren’t responsible for what their users post. There’s a short list of exceptions - platforms are required to take down material violating federal criminal law, copyright restrictions, or human trafficking law. Online services that exist to facilitate illegal transactions are also still vulnerable, so an online drug market can’t claim that they’re not responsible for all their users buying and selling drugs. But in general, this law allows social media to exist, allows any user-submitted online content to exist, and allows platforms to enact content moderation without automatically claiming legal liability for everything they host.

What’s wrong with 230?

A common critique of Section 230 is that it goes too far: platforms bear very little responsibility for the content they host, even if that content is hateful or harmful. For example, it is exceedingly difficult to sue Roblox for endangering children, even though it’s well documented that predators use their game platform to groom and harm children, because they aren’t responsible for what their players post. It is likewise very difficult to hold Meta accountable even when their own research showed that their platforms were dangerous for young people.

While Section 230 means that platforms can moderate content as they see fit, it also means that they don’t have to. The only real incentive platforms have to moderate content at all are market incentives: if a social media platform becomes a vile cesspool of hate and harassment, users may eventually flee to greener pastures that promise to keep out the Nazis. Unfortunately, switching costs are high: if I leave Twitter, I lose all my Twitter follows and followers. Even if those users have also migrated to Mastodon or Bluesky, there is not an easy way to re-establish those relationships en masse. So things have to get pretty bad for a critical mass of users to leave a platform, meaning in practice there is little pressure for the big players to moderate anything at all.

As we face more misinformation, more hate and harassment, and more AI slop online, it’s understandable that people cry out for the government to do something to pressure companies and improve our online spaces.

Why do we still need 230?

Unfortunately, simply repealing Section 230 would immediately destroy the Internet as we know it. Social media platforms like Reddit, Facebook, TikTok, YouTube, and Twitch could not exist. Collaborative writing projects like Wikipedia could not exist. Services like reviews on Google Maps or Yelp or Amazon could not exist, nor could Patreon or Kickstarter - any service where users contribute content could only continue to operate if a company moderator first examined the content to be sure the company wouldn’t be taking on unacceptable legal risk by distributing it. A blog like mine could survive because I don’t have a commenting system at time of writing, but that’s as close as we’d have to the Internet we know.

Technically, these platforms could continue to operate without any moderation, arguing that they’re just a passive conduit for users rather than publishers. However, given the sheer number of spam bots online, removing all moderation would also doom online platforms to collapse.

Why does this keep happening?

Every time there’s a new story about teen self-harm, or mass shooters radicalized online, or a range of other atrocities, politicians are pressured to hold social media accountable. Republicans in particular would like to target social media platforms for “censoring right-wing voices,” a largely baseless conspiracy that is impossible to pursue because companies are free to moderate content as they see fit. While social media companies usually push back against attempts to repeal 230, some larger companies see an advantage in “pulling up the ladder;” operating an army of content moderators would be exceedingly expensive for Facebook, but impossible for its smaller competitors, solidifying a permanent monopoly.

Is there any alternative?

Not an easy one. We don’t want to hold platforms legally liable for everything that users post, whether through exposing them to lawsuits or through some draconian government censorship board. For all the many flaws of social media, it is also an outlet for political and cultural expression, a platform for organizing and spreading information outside of traditional corporate media publishing, and a means of finding and building community with others. Those are ideals worth fostering.

In my opinion, one of the best courses of action is to build online platforms that are not profit-driven. A profit incentive implies keeping moderation costs down, and keeping engagement up to drive advertising revenue. This can lead (often unintentionally!) to dark patterns like encouraging radicalization and ‘hate-scrolling,’ because seeing more extreme and angry content keeps people watching. These incentives encourage lax moderation that won’t drive away users and inhibit ad money. By contrast, a community self-run platform like Mastodon has no advertising, no recommendation algorithm, and no incentive structure except wanting to provide a community space. Self-organized social media brings a host of other challenges related to content moderation and sustainable finances, but these seem to me to be more approachable than curtailing the behavior of Meta or Elon Musk through changing federal law.

RPI’s Vaccine-Autism Study

Posted 9/23/2025

The Centers for Disease Control and Prevention intend to award a no-bid contract to Rensselaer Polytechnic Institute to study whether childhood vaccination induces autism. I studied at RPI through my bachelors and masters degrees, and like many alumni, I am incensed. On September 15th I wrote the following letter in protest:

Dear Dr. Schmidt, Board of Trustees, and Deans,

I am a proud alumnus of Rensselaer (B.S. 2018, M.S. 2020), and credit the institute with shaping me as a professional scientist. I write today with grave concern about Rensselaer’s proposed contract with the CDC to study links between vaccinations and autism 1.

Rensselaer’s commitment to knowledge and thoroughness is well-known. However, the link between vaccines and autism, first proposed in a now-retracted 1998 paper 2, has been conclusively investigated and discredited 3 4 5. Scientific consensus is clear, and further studies are not warranted. Instead of providing certainty, Dr. Juergen Hahn’s proposed work will provide legitimacy to vaccine skeptics by suggesting that a causal link between vaccination and autism is even conceivable.

Vaccine hesitancy is on the rise globally, in part driven by perceived risk of vaccination 6. In contributing to this hesitancy, Rensselaer will be complicit in decreasing vaccination rates, a return of once-defeated diseases like measles, and ultimately, unnecessary suffering and early deaths. I urge the institute to reconsider their CDC contract, for the good of humanity and their own reputation.

Respectfully,

Dr. Milo Z. Trujillo

My letter was quoted by the local paper, and other alumni have sent their own letters, started a phone calling campaign, and circulated a petition. I am not optimistic that our efforts will sway RPI’s administration against the value of their CDC contract, but I believe it is imperative to try. After President Trump’s press release asserting autism is caused by Tylenol and vaccines, we’re likely to see greater drops in childhood vaccination. Scientists that entertain the thoroughly dismissed vaccine-autism link are fueling the flames and legitimizing Trump’s claims, and children will suffer and die as a result.

-

Tyler McNeil. “CDC to award RPI a contract for vaccine-autism research”. In: Times Union (Sept. 14, 2025). url: https://www.timesunion.com/news/article/cdc-contract-vaccine-autism-research-rpi-21047511.php (visited on 09/15/2025). ↩

-

Andrew J Wakefield et al. “RETRACTED: Ileal-lymphoid-nodular hyperplasia, non-specific colitis, and pervasive developmental disorder in children”. In: The Lancet 351 (9103 1998), pp. 637–641. doi: 10.1016/S0140-6736(97)11096-0. ↩

-

Frank DeStefano. “Vaccines and autism: evidence does not support a causal association”. In: Clinical Pharmacology & Therapeutics 82.6 (2007), pp. 756–759. ↩

-

Anders Hviid et al. “Measles, mumps, rubella vaccination and autism: a nationwide cohort study”. In: Annals of internal medicine 170.8 (2019), pp. 513–520. ↩

-

Luke E Taylor, Amy L Swerdfeger, and Guy D Eslick. “Vaccines are not associated with autism: an evidence-based meta-analysis of case-control and cohort studies”. In: Vaccine 32.29 (2014), pp. 3623–3629. ↩

-

Eve Dubé et al. “Vaccine hesitancy: an overview”. In: Human vaccines & immunotherapeutics 9.8 (2013), pp. 1763–1773. ↩

Word Prominence: A Primer

Posted 6/9/2025

A common task in natural language processing is determining what a text (a book, speech, tweet, email) is about. At its simplest, we may start by asking what words appear most prominently in a text, in the hope that they’ll be topic key-words. Here “prominence” is when a word appears disproportionately in this text compared to others. For example, “the,” “a,” and “and” will always appear with high frequency, but they appear with high frequency in every text and are uninteresting to us.

Our approach will start by reducing a text to a list of words and a count of how many times they occur, typically referred to as a bag of words model. This destroys sentence structure and context, a little like burning an essay and sifting through the ashes, but hey, it keeps the math simple, and you have to start somewhere.

For all of the techniques outlined below we’ll need two texts: the text we’re interested in studying, and a “baseline” corpus of text for comparison.

TF-IDF

Our first contender for identifying keywords is term-frequency inverse-document-frequency or TF-IDF. For each word in our text of interest we’ll first measure the frequency with which it occurs, or literally the number of times the word appears divided by the total number of words in the text. For example in Python, if t is a particular term and d is a dictionary of words and how many times they appear, then:

def tf(t, d): total = sum(d.values()) return d[t] / total

Then we’ll examine a number of other texts for comparison, and count how many of those texts contain the target word. Formally the inverse document frequency is defined as:

Or in Python, if D is a list of documents (where each document is a dictionary of terms and how many times they appear, as in tf):

def idf(t, D): total = 0 for d in D: if( t in d.keys() ): total += 1 return math.log(len(D) / total)

This means if a term appears in all N documents we’ll get idf(t, D) = log(N/N) = 0, and the fewer documents a term appears in, the higher its IDF score will be.

We can combine term-frequency and inverse-document frequency as simply term-frequency times inverse document frequency, or:

Now terms that appear in many documents will get a low score, and terms that appear in the target document with low frequency will get a low score, but terms that appear with relatively high frequency in the text of interest and appear in few other documents will get a high score.

What does this get us in practice? Well, to borrow Wikipedia’s example, we can compare every play by Shakespeare (I grabbed the Comedy, History, and Tragedy selection from here). Here are the top terms in Romeo and Juliet, sorted by their TF-IDF scores:

| Word | TF | IDF | TF-IDF |

|---|---|---|---|

| romeo | 0.0097 | 3.6109 | 0.0350 |

| juliet | 0.0057 | 2.9178 | 0.0166 |

| capulet | 0.0045 | 3.6109 | 0.0164 |

| mercutio | 0.0028 | 3.6109 | 0.0100 |

| benvolio | 0.0025 | 3.6109 | 0.0091 |

| tybalt | 0.0024 | 3.6109 | 0.0086 |

| laurence | 0.0024 | 2.9178 | 0.0070 |

| friar | 0.0031 | 1.5315 | 0.0047 |

| montague | 0.0013 | 2.9178 | 0.0039 |

| paris | 0.0018 | 1.4137 | 0.0026 |

| nurse | 0.0046 | 0.5664 | 0.0026 |

| sampson | 0.0007 | 2.9178 | 0.0019 |

| balthasar | 0.0006 | 2.5123 | 0.0014 |

| gregory | 0.0006 | 2.0015 | 0.0012 |

| peter | 0.0009 | 1.3083 | 0.0012 |

| mantua | 0.0004 | 2.5123 | 0.0011 |

| thursday | 0.0004 | 2.5123 | 0.0011 |

The top terms in the play by term frequency are “and,” “the,” and “I,” which each appear about 2% of the time - but these terms all have IDF scores of zero because they appear in every play, and so receive a TF-IDF score of zero as well.

However, only one play includes “Romeo.” This makes up almost 1% of Romeo and Juliet - very common, the twelfth most common or so word - but top of the list by TF-IDF standards.

TF-IDF isn’t perfect - it’s identified many of the names of characters, but also words like “Thursday” and “Paris” (not the setting, which is Verona, Italy) that aren’t especially central to the plot. Nevertheless, TF-IDF is impressively effective given its simplicity. So where does it really fall flat?

The biggest shortcoming of IDF is that it’s boolean: a term either appears in a document or not. However, in large corpora of text we often require more nuance than this. Consider trying to identify what topics a subreddit discusses by comparing comments in the subreddit to comments in other subreddits. In 2020 people in practically every subreddit were discussing COVID-19 - it impacted music festivals, the availability of car parts, the cancellation of sports games, and had some repercussion in almost every aspect of life. In this setting Covid would have an IDF score of near zero, but we may still want to identify subreddits that talk about Covid disproportionately to other communities.

Jensen-Shannon Divergence

Jensen-Shannon Divergence, or JSD, compares term frequencies across text corpora directly rather than with an intermediate “does this word appear in a document or not” step. At first this appears trivial: just try tf(t, d1) - tf(t, d2) or maybe tf(t, d1) / tf(t, d2) to measure how much a term’s frequency has changed between documents 1 and 2.

The difference between term frequencies is called a proportion shift, and is sometimes used. Unfortunately, it has a tendency to highlight uninteresting words. For example, if “the” occurs 2% of the time in one text and 1.5% of the time in another text, that’s a shift of 0.5%, more than Capulet’s 0.45%.

The second approach, a ratio of term frequencies, is more appealing. A relative change in frequencies may help bury uninteresting “stop words” like “the,” “a,” and “it,” and emphasize more significant shifts. However, there’s one immediate limitation: if a term isn’t defined in d2 then we’ll get a division-by-zero error. Some frequency comparisons simply skip over words that aren’t defined in both corpora, but these may be the exact topic words that we’re interested in. Other frequency comparisons fudge the numbers a little, adding 1 to the number of occurrences of every word to ensure every term is defined in both texts, but this leads to less mathematical precision, especially when the texts are small.

Jensen-Shannon Divergence instead compares the frequency of words in one text corpus against the frequency of words in a mixture corpus M:

Here, M is defined as the average frequency with which a term appears in the two texts, or M=1/2 * (P+Q). This guarantees that every term from texts P and Q will appear in M. Additionally, D refers to the Kullback-Leibler Divergence, which is defined as:

The ratio of frequencies gives us a measure of how much the term prominence has shifted, and multiplying by P(x) normalizes our results. In other words, if the word “splendiforous” appears once in one book and not at all in another then that might be a large frequency shift, but P(x) is vanishingly small and so we likely don’t care.

Note that JSD is defined in terms of the total amount that two texts diverge from one another. In this case we’re interested in identifying the most divergent terms between two texts rather than the total divergence, so we can simply rank terms by P(x) * log(P(x)/M(x)). Returning to our Romeo and Juliet example, such a ranking comparing the play to Othello (made by the Shifterator package) might look like:

Jensen-Shannon Divergence can find prominent terms that TF-IDF misses, and doesn’t have any awkward corners with terms that only appear in one text. However, it does have some limitations:

-

First, JSD is sensitive to text size imbalance: the longer a text is, the smaller a percentage each word in the text is likely to appear with, so measuring change in word prominence between a small and large text will indicate that all the words in the short text have higher prominence. To some extent this problem is fundamental - you can’t meaningfully compare word prominence between a haiku and a thousand-page book - but some metrics are more sensitive to size imbalances than others.

-

Second, KLD has a built-in assumption: frequency shifts for common words are more important than frequency shifts for uncommon words. For example, if a word leaps in frequency from 0.00005 to 0.00010 then its prominence has doubled, but it remains an obscure word in both texts. Multiplying the ratio of frequencies by

P(x)ensures that only words that appear an appreciable amount will have high divergence. What’s missing is a tuning parameter: how much should we prefer shifts in common terms to shifts in uncommon terms? Should there be a linear relationship between frequency and how much we value a frequency shift, or should it be an exponential relationship?

These two shortcomings led to the development of the last metric I’ll discuss today.

Rank-Turbulence Divergence

Rank-Turbulence Divergence is a comparatively new metric by friends at the Vermont Complex Systems Institute. Rather than comparing term frequency it compares term rank. That is, if a term moves from the first most prominent (rank 1) to the fourth (rank 4) that’s a rank shift of three. In text, term frequency tends to follow a Zipf distribution such that the rank 2 term appears half as often as the rank 1 term, and the rank 3 term a third as much as rank 1, and so on. Therefore, we can measure the rank as a proxy for examining term frequency. This is convenient, because rank does not suffer from the “frequency of individual terms decreases the longer the text is” challenge that JSD faces.

Critically, we do need to discount changes in high rank (low frequency) terms. If a term moves from 2nd most prominent to 12th most prominent, that’s a big change. If a term moves from 1508th most prominent to 1591st, that’s a little less interesting. However, instead of multiplying by the term frequency as in KLD, Rank Turbulence Divergence offers an explicit tuning parameter for setting how much more important changes in low rank terms are than high rank.

For words that appear in one text but not another, rank turbulence divergence assigns them the highest rank in the text. For example, if text 1 contains 200 words, but not “blueberry,” then blueberry will have rank 201, as will every other word not contained in the text. This is principled, because we aren’t assigning a numeric value to how often the term appears, only truthfully reporting that words like “blueberry” appear less than every other term in the text.

The math at first looks a little intimidating:

Even worse, that “normalization factor” is quite involved on its own:

However, the heart of the metric is in the absolute value summation:

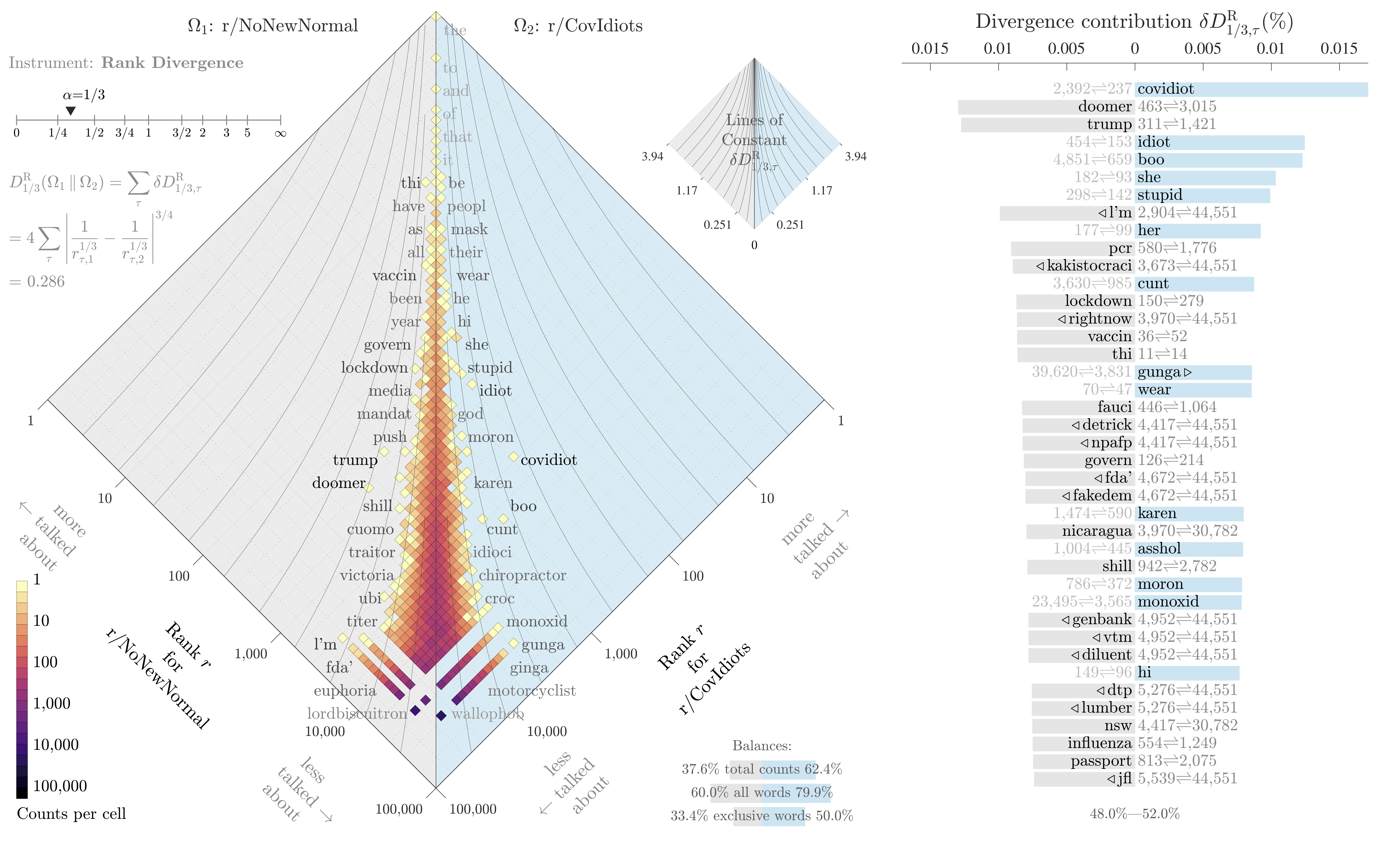

If all we want to do is identify the most divergent terms in each text, and not measure the overall divergence of the two systems, then this snippet is enough. It also builds our intuition for the metric: all we’re really doing is measuring a change in inverse rank, with a knob to tune how much we emphasize changes for common words. The knob ranges from 0 (high and low ranked terms are equally “important”) to infinity (massively emphasize shifts in common words, ignoring shifts in rare words). Here’s an example plotting the difference in words used on two subreddits, r/NoNewNormal (which was a covid conspiracy group) and r/CovIdiots (which called out people acting foolishly during the pandemic):

The “divergence contribution” plot on the right can be read like Shifterator’s JSD plot, and simply lists the most divergent terms and which text they appear more in. The allotaxonometry plot on the left reads like a scatterplot, where one axis is the rank of words in r/NoNewNormal and the other axis is the rank of words in r/CovIdiots. When words have the same rank in both texts they’ll appear on the center line, and the more they skew towards one subreddit or another the further out they’ll be plotted. Only a small subset of notable words are explicitly labeled, and the rest of the plot operates more like a heatmap to give a sense of how divergent the two texts are and whether that divergence starts with common words or only after the first thousand or so.

The plot highlights r/NoNewNormal’s focus on lockdowns, doomerism, and political figures like Trump and Fauci, while CovIdiots has a lot more insulting language. The plot correctly buries shared terms like “the” and common focuses like “covid”. That plot comes from one of my research papers, which I discuss in more detail here.

Conclusions

This about runs the gamut from relatively simple but clumsier metrics (tf-idf, proportion shifts) to highly configurable and more involved tools (RTD). The appropriate tool depends on the data - are there enough documents with enough distinct words that tf-idf easily finds a pattern? Splendid, no need to break out a more sophisticated tool. Communicating with an audience that’s less mathy? Maybe a proportion shift will be easier to explain. You have lots of noisy data and aren’t finding a clear signal? Time to move to JSD and RTD.

All of these tools only concern individual word counts. When identifying patterns in text we are often interested in word associations, or clustering many similar words together into topic categories. Tools for these tasks, like Latent Dirichlet allocation and topic modeling with Stochastic Block Models, are out of scope for this post. Word embeddings, and ultimately transformer models from Bert to ChatGPT, are extremely out of scope. Maybe next time.

Academic Funding on Fire

Posted 5/17/2025

I’m now a postdoc involved in applying for research funding, and have more insight into the process than I did as a graduate student. Given horrifying cuts to funding throughout academia, I wanted to share my perspective with non-academic readers.

Where Does Research Funding Come From?

In corporate research and development, funding comes from the business’ revenue or investors and is driven by product development. In academia, things are a little more varied. Academic funding typically comes from government grants, philanthropists, and corporate partnerships.

Government grants come from agencies like the National Science Foundation (NSF), National Institutes for Health (NIH), Department of Energy (DoE), Department of Defense (DoD), and so on. Each agency has a broad mandate, issues calls for projects on topics within their mandate, and awards money to project proposals they find promising.

Philanthropists include the Gates Foundation, the Chan-Zuckerberg Initiative, the Sloan Foundation, and other charitable groups. These groups often have much narrower mandates than their federal counterparts - for example, the National Science Foundation funds fundamental research and education that they hope will lay the groundwork for future applied studies, while philanthropic charities are likely to fund more immediately applied work related to eradicating disease or other topics of interest.

Corporate partnerships are often narrower and more directly applied than even philanthropic funding. For example, Google funded some of my research into the longevity of open source software. Many Google products are built upon open source software, and they want to be confident that those building blocks are being actively maintained and aren’t likely to be abandoned due to lack of funding or founder burnout. Clear utility to them, of interest to us, win-win.

While the prominence of funding sources varies between fields, in most disciplines the vast majority of funding comes from government grants.

What do Grants Look Like?

Scientists apply for grants by proposing a project or series of projects under an advertised call for proposals. Along with the description of the research and an argument for why it’s valuable, each grant has a budget including:

-

Salaries for professors, postdocs, graduate students, and other research staff working on the project

-

Equipment (computers, software, fancy microscopes, you name it)

-

Travel and publishing costs (for fieldwork, attending conferences, open-access publication fees, and so on)

Once research staff have their salaries covered by a grant, they may work on additional projects without funding. This is entirely normal for letting students explore their own interests, and helps with future grant funding. Scientists often apply for grants to support projects they started unpaid, using their preliminary results to justify funding and allocating more resources to the work. In this way scientists are always a little out of sync, using existing grant funding to support both the grant’s work and the groundwork for the next grant.

Indirect Costs

Before the scientists apply for a grant, the university amends the budget to add indirect costs. This overhead covers expenses that support all research indirectly but don’t fit neatly into an individual research budget. For example, building maintenance, and covering human resources, accountants, janitorial staff, electricity, and so on. These indirect costs vary between universities, but often exceed 50% - meaning half the grant money awarded will go to the university rather than the specific research project.

Philanthropists often put strict limits on indirect costs, frequently 15%. If your mission is to eradicate Ebola, you don’t want to report back to Bill and Melinda Gates that half your budget went to university administrators and libraries. This puts universities in an awkward position - they need those indirect costs to cover operations, but don’t want to turn down free money from a private foundation. The typical compromise is to use indirect costs from federal grants to cover the university’s overhead needs, then take what they can get from the philanthropists.

It’s all Burning Down

The Trump administration has frozen (then unfrozen, then refrozen, then-) grant awards from the National Institutes for Health and the National Science Foundation. They’ve cut countless existing grants, retracting already awarded funding mid-project.

Academics are pivoting from federal funding to philanthropy and corporate partnerships, but this presents two problems: first, there isn’t nearly enough private funding to replace federal shortfalls, and second, these grants often have the aforementioned 15% indirect cost cap, and so provide much less money to universities.

More recently, Republicans in Congress proposed capping federal grant indirect costs at 15% under the argument that universities clearly get by on low indirect costs from charities, so why can’t they do the same with government grants? This of course misses that government grant overheads have been supplementing charity grants, and so capping federal indirect costs would be devastating.

In any case, the academic funding situation is dire. Even if these policies were all reversed tomorrow, there’s a long lead time on starting new studies, hiring scientists, and some research (especially medical studies) can’t just be paused and restarted with fluctuating funding. Add to that all the scientists leaving the United States to seek more reliable work elsewhere, and American academia is committed to a downward spiral for quite a while.

Dungeon Generation with Binary Space Partitioning

Posted 5/11/2025

It’s a lazy Sunday afternoon, and I’ve been thinking about procedural dungeon generation. This is a challenge where we build algorithms to create maps suitable for video- or tabletop-games, such that the maps are random enough to provide some surprise and variety, and constrained enough to produce playable maps with coherent structure. There are many, many approaches to this problem, and today I’m going to write about one of the simplest.

The Setting

Rather than generating forests, caves, or towns, we’re going to generate a traditional dungeon in the style of Rogue: a series of underground rooms, connected by passageways. Here’s one such example from Nethack rendered as traditional ASCII-art, featuring eight rooms, staircases up and down (< and >), our hero (@), and our trusty cat (f):

-----

|...| ------- -----

|...| #.......######################## #.....##

|...| #|.....| # #|...| #

|....##### #|.....-### ------------ ## #|...| #

|...| #####-<....| ### |........... ####|...| #

---.- # |.....| ### ...........| ###----- ###-----------

# ## ------- ## |..........-### ###### #-.........|

# # #####...........| # # |.........|

# # # ## ------------ ## # |.........|

# # ####### # ##### # |.........|

### # #---.---- # #-|-.-# |.........|

--.------ # #|>...f.|## #.....# |.........|

|.......|### #|...@..| # |...| -----------

|........######|.......## |...|

|.......|# ------.- |...|

|........# # -----

---------

So, we need a series of non-overlapping rectangles of a variety of sizes, and paths between the rectangles so as to guarantee any room can be reached from any other. We’d also like some locality: rooms should typically link to other nearby rooms, only rarely producing long corridors that stretch across the map. Other details, like giving corridors twists and dead-ends, we’ll leave off for now in the name of simplicity.

The Algorithm

Today we’ll be using Binary Space Partitioning, a general purpose technique often used in computer graphics, collision-prediction in robotics, and other scenarios where diving up a physical space is useful. Here’s the approach, described in terms of our problem:

-

Begin with a rectangle the size of the entire map

-

Choose whether to cut the rectangle vertically or horizontally

-

Choose a random cut-position along the x- or y-axis (according to step 2) such that the two child-rectangles are both above a minimum size - if no such cut is possible, stop

-

Repeat step two on each child-rectangle

This recursive algorithm lets us break up one big rectangle into several smaller adjoining rectangles. For an arbitrary visualization, here’s a 100x100 rectangle, broken into smaller pieces so long as those pieces are at least 10x10:

We can visualize this rectangle cutting algorithm using a tree, which traces our recursive function calls:

Converting the Graph to a Dungeon

We have adjoining and non-overlapping rectangles, but these don’t look like rooms yet. To generate rooms we want to shrink our rectangles and add some buffer space between neighbors. For each leaf on the original graph, r1 through r12, let’s generate a rectangle with random dimensions between a minimum width and height of 10, and a maximum width and height of its parent rectangle minus two (for the borders). Then we’ll place the new rectangle randomly within the confines of its parent:

Okay, we have rooms! How about corridors? Here we’ll make use of our tree structure: once we’ve reached the bottom of the tree and placed a room in our rectangle, we’ll start returning back up the recursive tree. At each parent node we’ll randomly select a room from each child branch, and add an edge between them.

Here’s an example process for the dungeon we’ve been illustrating:

-

r3 and r4 are sibling rooms at the bottom of a branch, so at the cut node above them we will add an edge between r3 and r4

-

At the parent cut node we’ll select a random room from the left branch (r3 or r4) and a random room from the right branch (only r2 is available) and add an edge between them - we’ve connected r2 and r3

-

At the parent cut node we’ll select a random room from the left (r2, r3, or r4) and a random room from the right (r1) and add an edge between them - we’ve connected r1 and r3

-

At the parent cut node we’ll select a random room from the left (r1 through r4) and a random room from the right (r5 through r12) and add an edge between them - we’ve connected r1 and r7

The result looks like:

Note that a few paths overlap: r6 and r7 are connected, but the path is obscured by r1-r7 and r6-r10. The path from r1 to r7 intersects both r3 and r5. These collisions are desirable in our application, because they increase the diversity of room configurations - r3 has five doors, despite only being explicitly connected to three neighboring rooms.

Adding some Frills

We have a minimum-viable dungeon! Rooms of variable size, in a fully connected graph, mostly connecting nearby rooms to one another. Pretty bare-bones, but not bad for under 70 lines of Python. There’s lots more we could do from here: we could add some turbulence to paths, so that they sometimes zig-zag or move more diagonally between rooms. We could delete a room or two and part of its edges to introduce dead-ends - but we’d need to be careful to delete only rooms with a maximum degree of one to avoid disconnecting parts of the dungeon. We could introduce some shapes besides rectangles - perhaps one room represents a theater or Colosseum and should be elliptical. Until now we have only considered the structure of the map, but we can now add constraints to assign the purpose and contents of each room. Some example rules might include:

-

Rooms of a large size have a chance to be merchant halls or city centers

-

Rooms of moderate size, a skewed width to height ratio, and a maximum of two exits may be barracks

-

Small rooms adjoining a barracks may be an armory

-

Small rooms with only one doorway may be storehouses

-

Moderately sized single-exit rooms rooms may be shops

-

Distant rooms may be goblin dens, and have tunnels rather than corridors

Coloring rooms by their assigned purpose, swapping out r5 for an elliptical town center, and indicating that the path from r3 to r4 should be a roughly hewn tunnel yields a map with slightly more life in it:

Conclusion

Not much in the way of conclusion! This was fun to play with, and my main code is here if anyone wants to take a look.